
This confusion stems from the common belief that there are two ways to optimize the link-based popularity of your website: either the meritocratic, long-term option of developing natural links, or the risky, short-term option of acquiring non-earned backlinks through link spamming tactics such as buying links. We've always taken a clear stance on manipulation of the PageRank algorithm in our Quality Guidelines. Despite these policies, participating in link schemes might have paid off in the past. More recently, however, Google has tremendously refined its link-weighting algorithms, and we have more people working on link weighting for quality control and to correct issues we find. So nowadays, link-selling sites that try to undermine the PageRank algorithm are likely to lose their ability to pass on reputation via links to other sites.

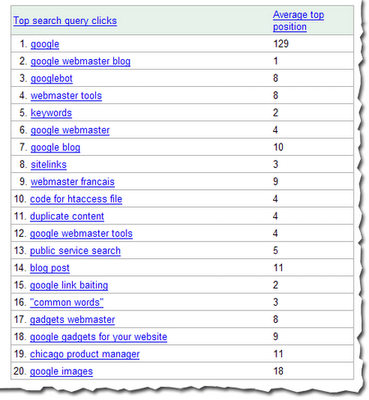

The discounting of non-earned links by search engines has opened up a wide new field of tactics for building link-based popularity. Classically, this involves optimizing your content so that thematically related or trusted websites link to you by choice. A more recent method is link baiting, which typically takes advantage of Web 2.0 social content websites. One example of this new way of generating links is to submit a handcrafted article to a service such as http://digg.com. Another is to earn a reputation in a certain field by building authority through services such as http://answers.yahoo.com. Our general advice is: always focus on users, not on search engines, when developing your optimization strategy. Ask yourself what creates value for your users. Investing in the quality of your content, and thereby earning natural backlinks, both benefits your users and drives more qualified traffic to your site.

To sum up, even though improved algorithms have promoted a transition away from paid or exchanged links towards earned organic links, there still seems to be some confusion within the market about what the most effective link strategy is. So when taking advice from your SEO consultant, keep in mind that nowadays search engines reward sweat-of-the-brow work on content that attracts natural links given by choice.

In French / en français

Liens et popularité.

[Translated by] Eric et Adrien, l'équipe de qualité de recherche.

Les 28 et 29 novembre derniers, nous étions à Paris pour assister à SES. Nous avons eu la chance de rencontrer les acteurs du référencement et du Web marketing en France. L'un des principaux points abordés au cours de cette conférence, et sur lequel il règne toujours une certaine confusion, concerne l'utilisation des liens dans le but d'augmenter la popularité d'un site. Nous avons pensé qu'il serait utile de clarifier le traitement réservé aux liens spam par les moteurs de recherche.

Cette confusion vient du fait qu'un grand nombre de personnes pensent qu'il existe deux manières d'utiliser les liens pour augmenter la popularité de leurs sites. D'une part, l'option à long terme, basée sur le mérite, qui consiste à développer des liens de manière naturelle. D'autre part, l'option à court terme, plus risquée, qui consiste à obtenir des liens spam, tels que les liens achetés. Nous avons toujours eu une position claire concernant les techniques visant à manipuler l'algorithme PageRank dans nos conseils aux webmasters.

Il est vrai que certaines de ces techniques ont pu fonctionner par le passé. Cependant, Google a récemment affiné les algorithmes qui mesurent l'importance des liens. Un plus grand nombre de personnes évaluent aujourd'hui la pertinence de ces liens et corrigent les problèmes éventuels. Désormais, les sites qui tentent de manipuler le PageRank en vendant des liens peuvent voir leur capacité à transmettre leur popularité diminuer.

Du fait que les moteurs de recherche ne prennent désormais en compte que les liens pertinents, de nouvelles techniques se sont développées pour augmenter la popularité d'un site Web. Il y a d'une part la manière classique, et légitime, qui consiste à optimiser son contenu pour obtenir des liens naturels de la part de sites aux thématiques similaires ou faisant autorité. Une technique plus récente, la pêche aux liens (en anglais « link baiting »), consiste à utiliser à son profit certains sites Web 2.0 dont les contenus sont générés par les utilisateurs. Un exemple classique consiste à soumettre un article soigneusement préparé à un site comme http://digg.com. Un autre exemple consiste à acquérir un statut d'expert concernant un sujet précis, sur un site comme http://answers.yahoo.com. Notre conseil est simple : lorsque vous développez votre stratégie d'optimisation, pensez en premier lieu à vos utilisateurs plutôt qu'aux moteurs de recherche. Demandez-vous quelle est la valeur ajoutée de votre contenu pour vos utilisateurs. De cette manière, tout le monde y gagne : investir dans la qualité de votre contenu bénéficie à vos utilisateurs, cela vous permet aussi d'augmenter le nombre et la qualité des liens naturels qui pointent vers votre site, et donc, de mieux cibler vos visiteurs.

En conclusion, bien que les algorithmes récents aient mis un frein aux techniques d'échanges et d'achats de liens au profit des liens naturels, il semble toujours régner une certaine confusion sur la stratégie à adopter. Gardez donc à l'esprit, lorsque vous demandez conseil à votre expert en référencement, que les moteurs de recherche récompensent aujourd'hui le travail apporté au contenu qui attire des liens naturels.
